Projects

Here is a selection of recent projects spanning experimental biomechanics, musculoskeletal modeling, finite element analysis, machine learning, computer vision, robotics, data science, controls, and geospatial analysis. This portfolio reflects my interdisciplinary approach to understanding human movement, developing intelligent systems, and applying computational methods to solve engineering challenges.

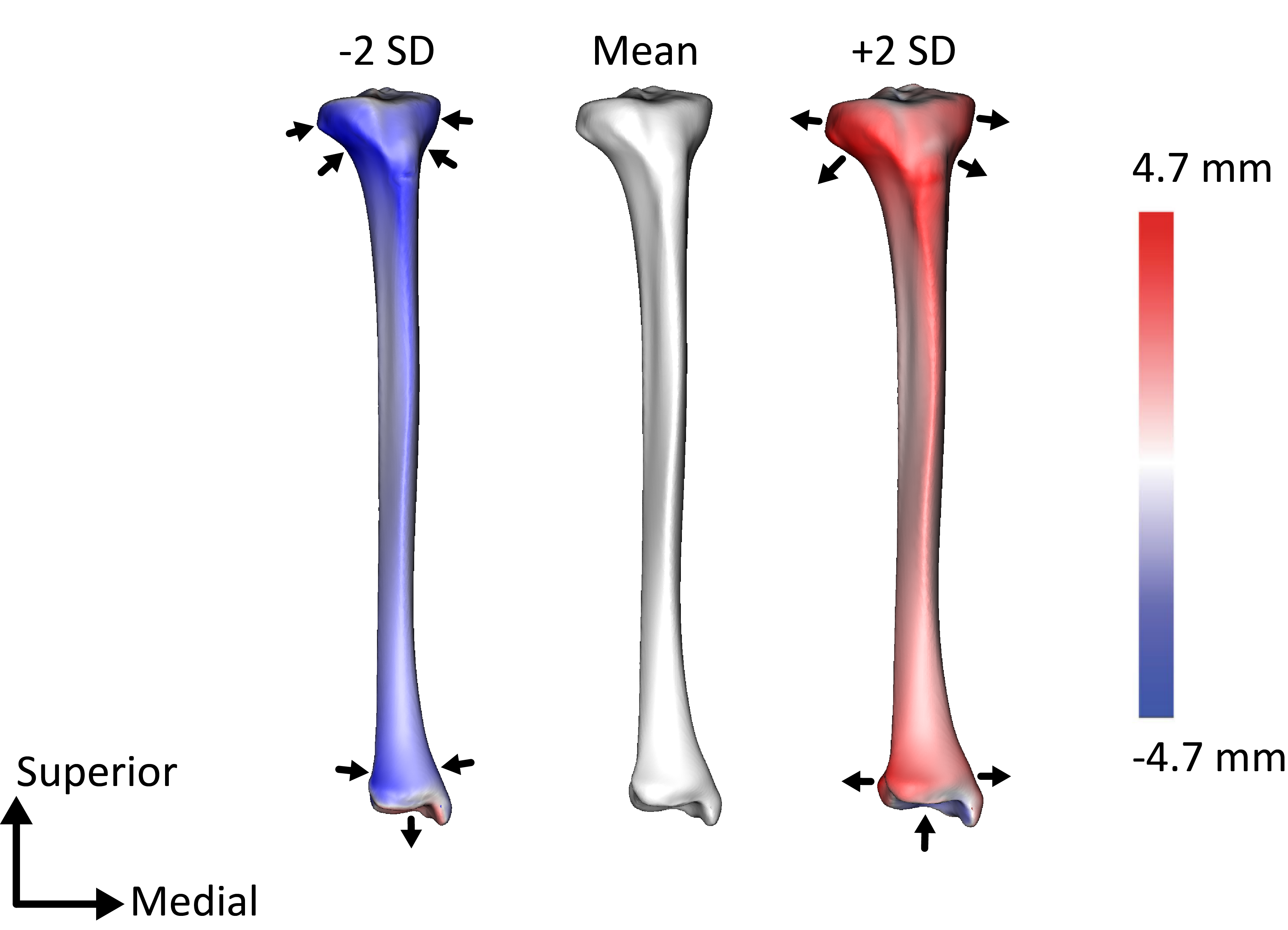

Statistical Shape Modeling of the Tibial Cortical Morphology

Specimen-specific fixtures for cadaveric testing are often designed manually, without reliable anatomical data to ensure proper biomechanical alignment. I streamlined this process by analyzing over 100 lower-limb CT scans to generate 3D tibial models, then applying statistical shape modeling (SSM) and principal component analysis (PCA) to quantify morphological variability. Using MATLAB, Python, ShapeWorks, and Materialise Mimics/3-Matic, I characterized key anatomical trends, including alignment, torsion, and geometric differences, and developed a tibial shape modeling framework to guide biomechanically informed fixture design.
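
As a rough illustration of the PCA step in an SSM pipeline, the sketch below extracts the leading mode of shape variation from a set of shapes. It assumes each tibia has already been aligned and reduced to a flattened vector of corresponded surface points, the kind of output a correspondence tool such as ShapeWorks produces. The function names are hypothetical, and the pure-Python power iteration stands in for the NumPy or MATLAB eigen-solvers a real pipeline would use.

```python
import math


def mean_shape(shapes):
    """Point-wise mean of corresponded, pre-aligned shape vectors."""
    n = len(shapes)
    return [sum(s[i] for s in shapes) / n for i in range(len(shapes[0]))]


def covariance(shapes, mean):
    """Sample covariance (n - 1 denominator) of the shape vectors."""
    n, d = len(shapes), len(mean)
    centered = [[s[i] - mean[i] for i in range(d)] for s in shapes]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def leading_mode(cov, iters=1000, tol=1e-12):
    """Largest eigenvalue and unit eigenvector of a symmetric PSD matrix (power iteration)."""
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d  # assumes the start vector is not orthogonal to the mode
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        w = [x / norm for x in w]
        done = max(abs(a - b) for a, b in zip(w, v)) < tol
        v = w
        if done:
            break
    # The Rayleigh quotient gives the variance captured along v.
    eigval = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
    return eigval, v


def explained_fraction(cov, eigval):
    """Share of the total shape variance (trace of cov) captured by one mode."""
    return eigval / sum(cov[i][i] for i in range(len(cov)))
```

Further modes can be obtained the same way after deflating the covariance (subtracting `eigval * v[i] * v[j]` from each entry), and each shape can then be summarized by its projections onto the retained modes.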

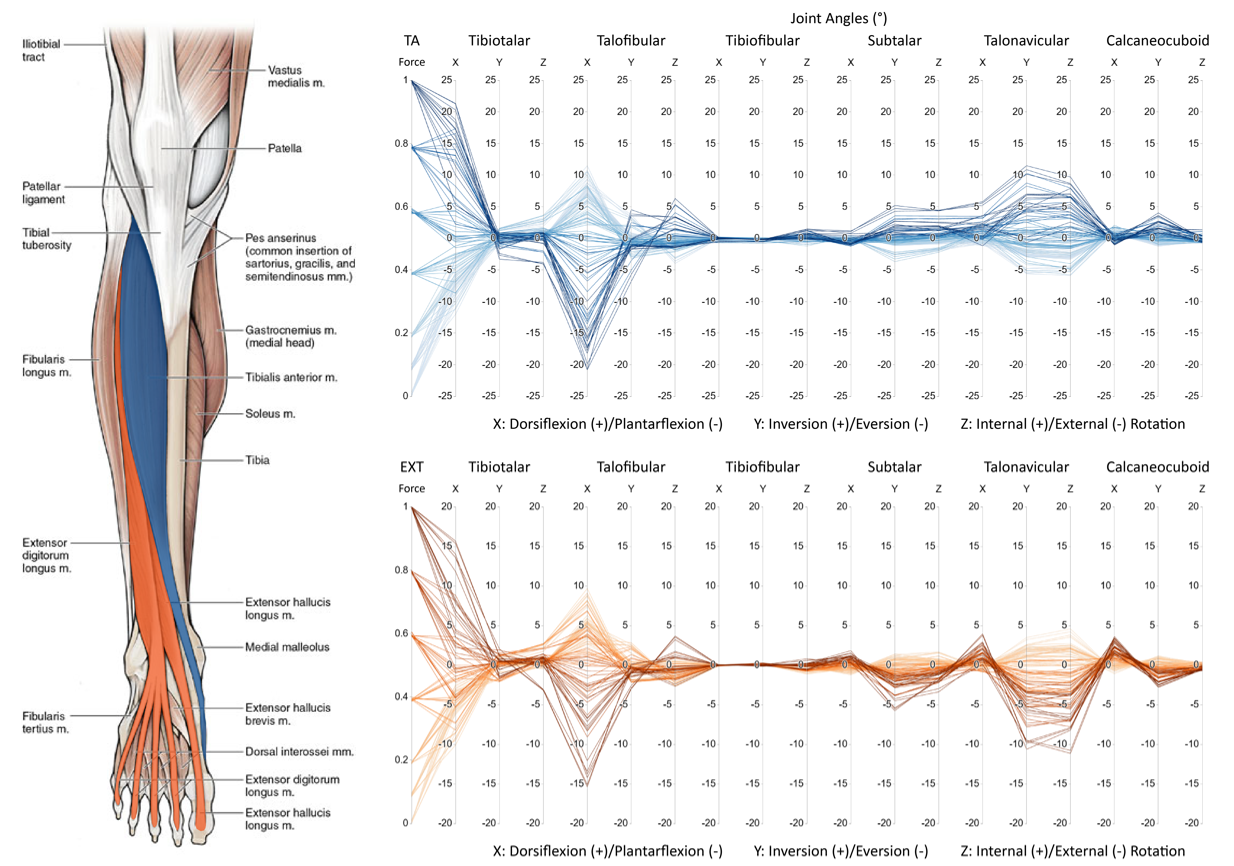

Characterizing the Relationship Between Muscle Activity and Talocrural, Subtalar, and Midtarsal Joint Kinematics

To understand how the activity of individual extrinsic muscles influences ankle joint complex motion, I conducted cadaveric experiments using a robotic tendon force actuator system to independently load six muscle groups, and captured 3D joint kinematics with bone-mounted optical markers. I used PCA and joint-specific kinematic modeling to identify muscle-driven motion patterns and shared coordination across the talocrural, subtalar, and midtarsal joints. Data collection and processing relied on LabVIEW, Python, MATLAB, CT-based segmentation, and motion capture tools, producing high-fidelity biomechanical data that can improve musculoskeletal models and support surgical applications.

Fine-Tuning Pre-Trained Faster R-CNN to Reduce False Positives in Guardrail Damage Detection from Dashcam Images

I built an automated computer vision pipeline to detect highway guardrail damage, aiming to replace slow, manual inspections with a scalable, data-driven approach. Using a pre-trained Faster R-CNN model, I developed a supervised fine-tuning workflow that incorporated focal loss for class imbalance, extensive data augmentation, custom anchor design, and post-processing steps such as DBSCAN clustering and Soft-NMS. I also introduced a saliency-based scoring method to evaluate annotation quality. This system reduced false positives from 2.84 to 0.25 per image while preserving precision and recall, demonstrating strong potential for broader infrastructure monitoring. The project was implemented in Python and PyTorch, leveraging tools such as Faster R-CNN, YOLO, and saliency map analysis.
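
Soft-NMS differs from standard non-maximum suppression in that overlapping detections have their scores decayed instead of being discarded outright, which can help when neighboring guardrail segments produce legitimately overlapping boxes. The sketch below shows the Gaussian variant described by Bodla et al. (2017). The `sigma` and `score_threshold` values are illustrative defaults, not the settings used in this project, and the helper names are hypothetical.

```python
import math


def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms_gaussian(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian Soft-NMS: decay, rather than delete, boxes overlapping a kept box.

    Returns (box_index, rescored_score) pairs in selection order.
    """
    remaining = {i: float(s) for i, s in enumerate(scores)}
    kept = []
    while remaining:
        best = max(remaining, key=remaining.get)
        best_score = remaining.pop(best)
        if best_score < score_threshold:
            break  # every remaining score is lower still
        kept.append((best, best_score))
        for i in remaining:
            overlap = iou(boxes[best], boxes[i])
            # Heavily overlapping boxes are penalized most; disjoint boxes keep their score.
            remaining[i] *= math.exp(-(overlap ** 2) / sigma)
    return kept
```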

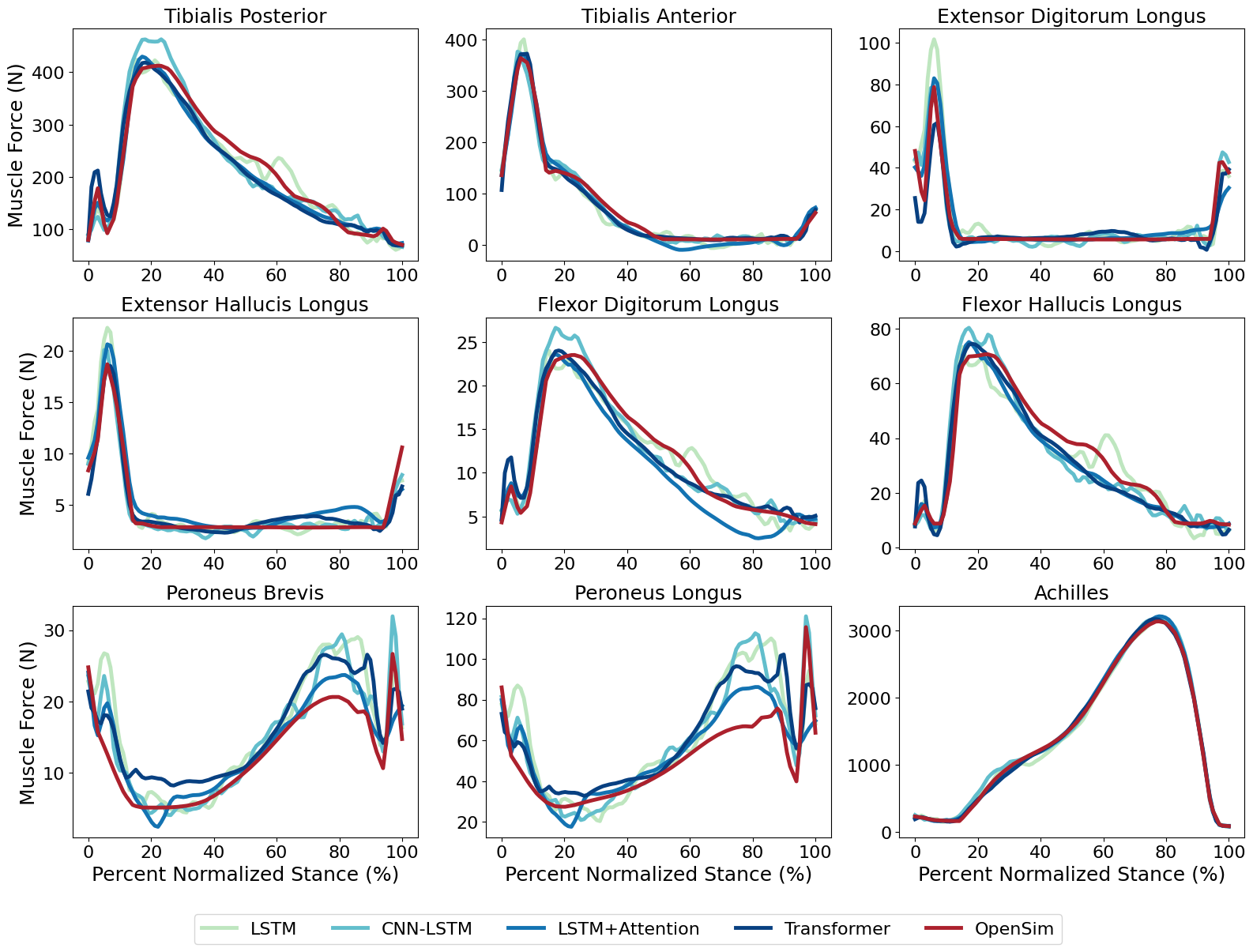

Predicting Lower Limb Muscle Forces from Ground Reaction Forces During Gait Using Sequence and Attention-Based Deep Learning Models

I developed deep learning models to predict lower limb muscle forces directly from ground reaction forces (GRFs), providing a way to assess muscle-level biomechanics without motion capture or EMG. I trained LSTM, CNN-LSTM, attention-enhanced LSTM, and Transformer architectures on 13,000 gait cycles and compared their predictions with reference muscle forces estimated through an OpenSim static optimization pipeline. GRFs alone were sufficient to recover detailed muscle force profiles, with the Transformer performing best (MAE 13.69 N, R² = 0.996). This work highlights the potential for portable force-plate systems to deliver clinically meaningful biomechanical insights outside traditional laboratory environments. The pipeline was implemented in Python and PyTorch, with OpenSim used for musculoskeletal modeling.
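
For reference, the two reported metrics are commonly defined as below, computed between predicted and reference muscle-force samples. The source does not state whether the reported values pool all muscles and time points or average per-muscle scores, so the flat pooling here is an assumption. Some studies instead report R² as the squared Pearson correlation, which can differ from the coefficient of determination used in this sketch.

```python
def mae(y_true, y_pred):
    """Mean absolute error, in the units of the data (newtons for muscle force)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot
```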

Cardan Sequence Selection Influences Subtalar and Talonavicular Joint Kinematics

I investigated how different Cardan angle sequences influence the interpretation of 3D foot and ankle joint kinematics, an important issue for joints with complex multiplanar motion. I compared six Cardan sequences across five joints of the ankle joint complex using in vivo biplane fluoroscopy gait data and in vitro robotic cadaveric simulations. Proximal joints showed consistent kinematics across sequences, while the subtalar and talonavicular joints demonstrated large sequence-dependent differences that can introduce kinematic crosstalk. This analysis produced joint-specific recommendations for choosing rotation sequences that improve clinical relevance and reproducibility. I used MATLAB, LabVIEW, CT-based segmentation, motion capture data, and statistical parametric mapping (SPM) to evaluate methodological effects in foot and ankle biomechanics.
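
The sequence dependence comes from the fact that finite 3D rotations do not commute, so one joint orientation yields different angle triplets depending on the order in which the axes are decomposed. The sketch below builds a single orientation with an XYZ (mobile-axis) sequence and re-expresses it with a ZYX sequence. The axes are generic and the function names are hypothetical; mapping x, y, and z to anatomical axes such as dorsiflexion/plantarflexion or inversion/eversion depends on the joint coordinate system and is left as an assumption.

```python
import math


def rot(axis, angle):
    """3x3 rotation matrix about a body axis ('x', 'y' or 'z'); angle in radians."""
    c, s = math.cos(angle), math.sin(angle)
    if axis == "x":
        return [[1, 0, 0], [0, c, -s], [0, s, c]]
    if axis == "y":
        return [[c, 0, s], [0, 1, 0], [-s, 0, c]]
    if axis == "z":
        return [[c, -s, 0], [s, c, 0], [0, 0, 1]]
    raise ValueError(f"unknown axis {axis!r}")


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def compose(sequence, angles):
    """Intrinsic Cardan composition, e.g. 'xyz' gives Rx @ Ry @ Rz."""
    r = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    for axis, angle in zip(sequence, angles):
        r = matmul(r, rot(axis, angle))
    return r


def decompose_xyz(r):
    """Angles (about x, y, z) with r = Rx @ Ry @ Rz; assumes |y angle| < 90 deg (no gimbal lock)."""
    return (math.atan2(-r[1][2], r[2][2]),
            math.asin(max(-1.0, min(1.0, r[0][2]))),
            math.atan2(-r[0][1], r[0][0]))


def decompose_zyx(r):
    """Angles (about z, y, x) with r = Rz @ Ry @ Rx; assumes |y angle| < 90 deg (no gimbal lock)."""
    return (math.atan2(r[1][0], r[0][0]),
            math.asin(max(-1.0, min(1.0, -r[2][0]))),
            math.atan2(r[2][1], r[2][2]))


if __name__ == "__main__":
    orientation = compose("xyz", [math.radians(v) for v in (20.0, 10.0, 30.0)])
    print("XYZ angles (x, y, z):", [round(math.degrees(v), 2) for v in decompose_xyz(orientation)])
    print("ZYX angles (z, y, x):", [round(math.degrees(v), 2) for v in decompose_zyx(orientation)])
```

For this 20/10/30 degree example, the ZYX triplet is not simply the XYZ angles reordered; the middle (y) angle even changes sign, although both triplets describe the same matrix. The same mechanism underlies the large sequence-dependent differences described above for the subtalar and talonavicular joints.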

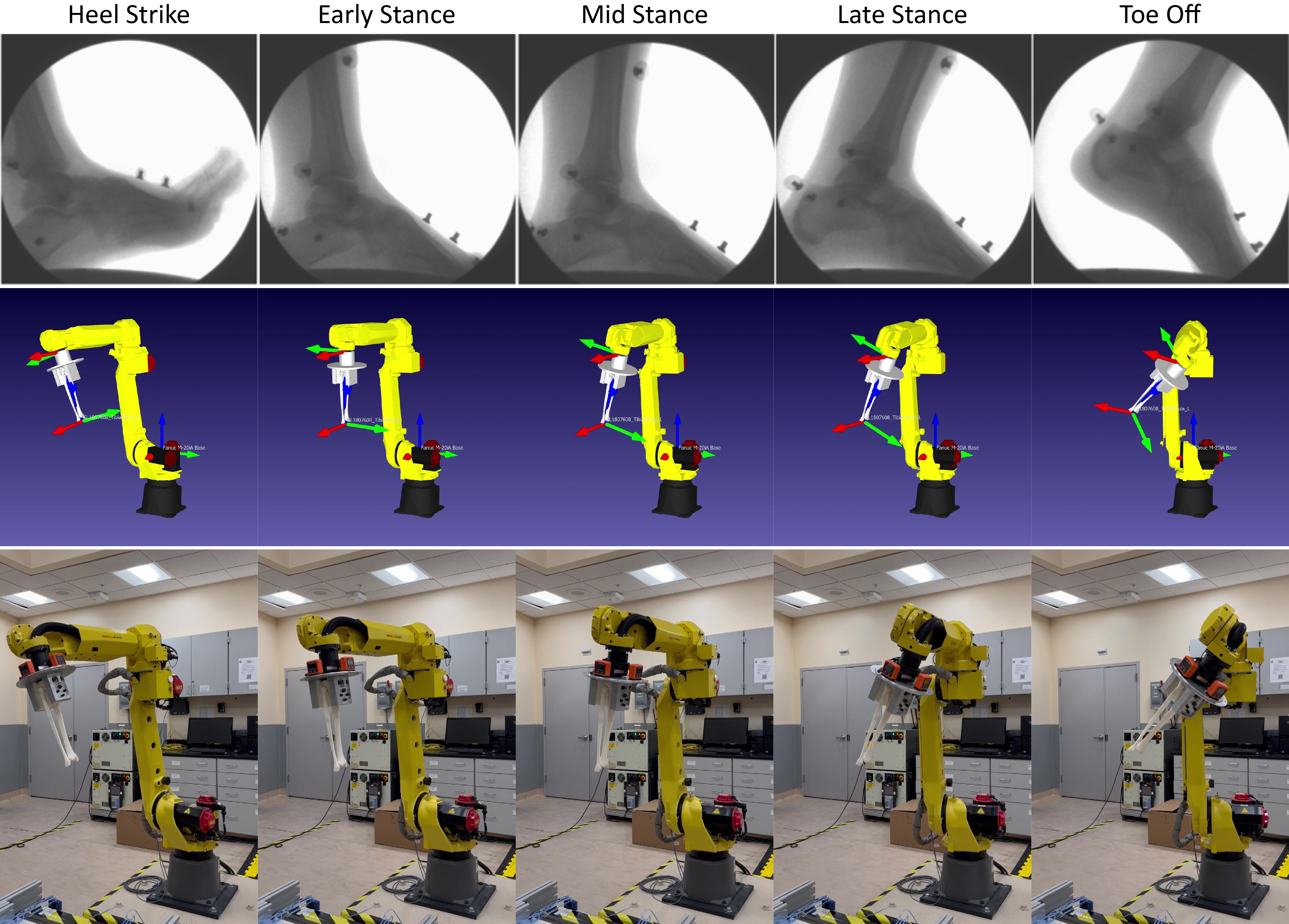

Passive Ankle and Hindfoot Kinematics within a Robot-Driven Tibial Movement Envelope

I quantified the passive motion of ankle and hindfoot joints to understand how these joints adapt during tibial movement and how they contribute to overall foot and ankle mobility. I designed and executed cadaveric experiments using a six-axis industrial robotic manipulator that applied controlled tibial motions with underfoot perturbations, then captured bone-level kinematics with CT-based models and motion tracking. Statistical analysis showed that hindfoot joints adapt considerably during dorsiflexion, plantarflexion, and rotational movements, while coronal-plane motions remain relatively constrained. These findings help clarify the passive mobility and stabilizing roles of the hindfoot. I used MATLAB, LabVIEW, motion capture tools, CT segmentation, and SPM.

Exploring Text Classification for Predicting Trial Outcomes in Old Bailey Proceedings

I explored how machine learning models could predict trial outcomes from the historic Old Bailey court transcripts using a Kaggle dataset. I implemented several foundational algorithms from scratch in Python and NumPy, including ID3, Perceptron, SVM, and logistic regression, then built a neural network in TensorFlow/Keras to capture nonlinear patterns in the text. After feature engineering and extensive preprocessing, the neural network achieved 81 percent accuracy on the evaluation set, outperforming the classical models and ranking 11th out of 142 students. The project highlights end-to-end model development, from algorithmic implementation to tuning and evaluation.
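
Of the classical models listed above, the perceptron is the simplest to implement from scratch. The sketch below trains one on bag-of-words counts with labels encoded as +1 and -1 (for example, guilty versus not guilty). The tokenization, the label encoding, and the function names are hypothetical, since the project's actual feature engineering is not described here.

```python
from collections import Counter


def features(text):
    """Bag-of-words counts (lower-cased, whitespace-tokenized)."""
    return Counter(text.lower().split())


def score(weights, bias, x):
    return bias + sum(weights.get(word, 0.0) * count for word, count in x.items())


def train_perceptron(docs, labels, epochs=20):
    """Classic perceptron; labels are +1 or -1, weights stored sparsely in a dict."""
    weights, bias = {}, 0.0
    for _ in range(epochs):
        mistakes = 0
        for doc, y in zip(docs, labels):
            x = features(doc)
            if y * score(weights, bias, x) <= 0:  # misclassified or on the boundary
                for word, count in x.items():
                    weights[word] = weights.get(word, 0.0) + y * count
                bias += y
                mistakes += 1
        if mistakes == 0:  # converged: every training example is classified correctly
            break
    return weights, bias


def predict(weights, bias, doc):
    return 1 if score(weights, bias, features(doc)) > 0 else -1
```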

Towards an Autonomous Surgical Retraction System via Uncertainty Quantification

We investigated how to quantify uncertainty in learned surgical soft-tissue manipulation policies within the DeformerNet framework. In PyTorch, we implemented and compared two uncertainty estimation methods: deep ensembles and Monte Carlo dropout. Deep ensembles provided the more reliable uncertainty signal, with ensemble variance clearly separating successful manipulations from failed ones. This work highlights the value of uncertainty-aware learning in surgical robotics and supports safer, more interpretable autonomous systems.
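
At prediction time, a deep ensemble reduces to running several independently trained networks on the same input and treating their disagreement as the uncertainty signal. The sketch below computes the per-output mean and variance across members and applies a simple threshold rule. The members are plain callables standing in for trained PyTorch policies, and both the output format and the `should_defer` gating rule are hypothetical rather than DeformerNet's actual interface.

```python
import statistics


def ensemble_predict(members, x):
    """Mean and per-output variance of the members' predictions for input x.

    Each member is any callable returning a list of floats (e.g. an action
    vector); in practice these would be independently trained networks.
    """
    preds = [member(x) for member in members]
    dims = range(len(preds[0]))
    mean = [statistics.fmean(p[d] for p in preds) for d in dims]
    var = [statistics.pvariance([p[d] for p in preds]) for d in dims]
    return mean, var


def should_defer(var, threshold):
    """Hypothetical gating rule: flag the action as unreliable when the
    summed ensemble variance exceeds a tuned threshold."""
    return sum(var) > threshold
```

In a retraction setting, a flagged prediction could trigger a pause or a request for human input; the threshold would need to be calibrated on held-out successful and failed manipulations.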

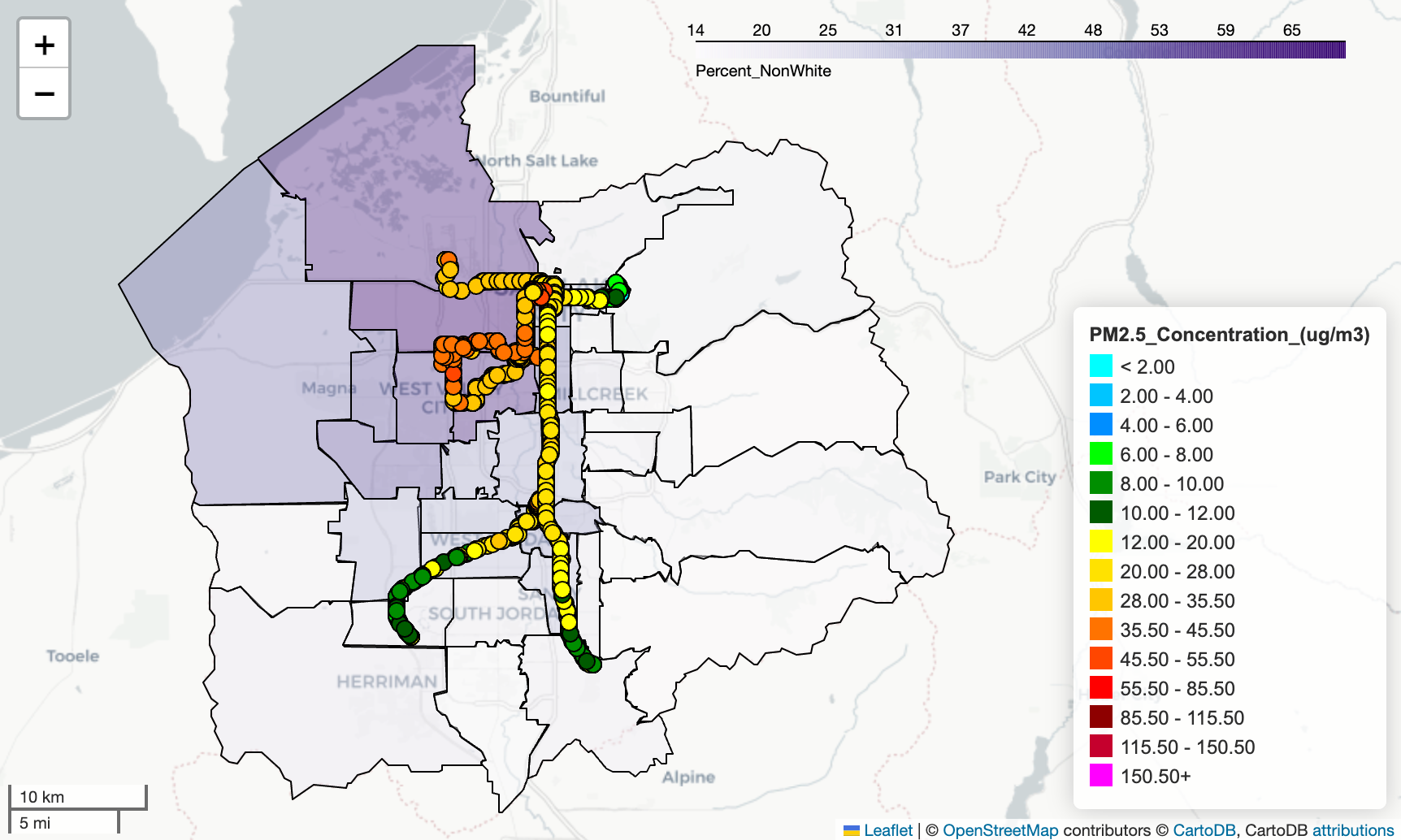

Mobile Air Quality Monitoring in the Salt Lake Valley

I analyzed mobile air quality data across the Salt Lake Valley to understand how pollution exposure varies by neighborhood and how those patterns relate to socioeconomic factors. Using geospatial datasets collected from sensors mounted on light rail cars and e-buses, I mapped pollutant concentrations and linked them with demographic metrics aggregated by ZIP Code Tabulation Area (ZCTA). The analysis revealed clear disparities in exposure, with west-side communities experiencing consistently poorer air quality. The project combined geospatial analysis, data visualization, and statistical correlation using tools such as Python, GeoPandas, Pandas, Matplotlib, Contextily, Folium, and scikit-learn.

Replicating In Vivo Tibial Motion with a 6-Axis Industrial Robotic Manipulator

I recreated human tibial gait motion in a physical experimental setup by driving a 3D-printed tibia with an industrial 6-axis robotic manipulator. Using biplane fluoroscopy data as the reference motion, I processed the in vivo kinematics and programmed the robot to reproduce the trajectory with high fidelity. This system enabled accurate replication of tibial gait for validating motion capture methods and supporting downstream biomechanical studies. The workflow combined Python, RoboDK, ROS, and custom 3D-printed hardware to integrate robotics and human movement data.

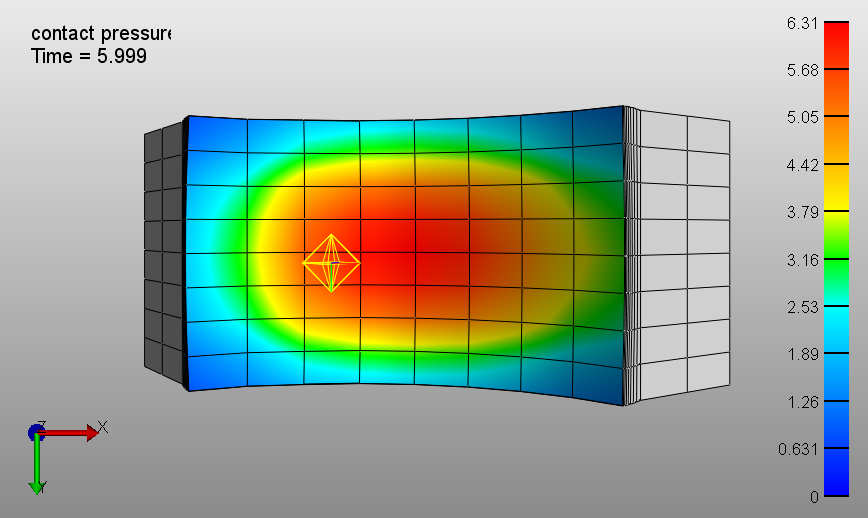

Finite Element Model of Biphasic Contact in the Tibiotalar Joint

I built finite element models of the tibiotalar joint to study how differences in bone and cartilage morphology affect mechanical contact behavior in both healthy and osteoarthritic conditions. Using FEBio and MATLAB, I simulated biphasic-on-biphasic contact mechanics across multiple anatomical variants, allowing me to quantify changes in joint loading and pressure distribution. These analyses provided insight into how morphological variation may contribute to OA progression and informed future directions for joint mechanics research.

Monte Carlo Simulation of the Monty Hall Problem

I used Monte Carlo simulation to explore probability and decision-making in the classic Monty Hall problem. By repeatedly simulating the three-door game in Julia, I quantified how often a contestant wins when switching versus staying. The results reproduced the well-known but unintuitive finding that switching doubles the chance of winning, from 1/3 (about 33 percent) to 2/3 (about 67 percent): the initial pick is correct only a third of the time, and because the host always opens a losing door, switching wins in every other case. The project served as a simple but powerful demonstration of probabilistic reasoning and simulation-based analysis.
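
The original simulation was written in Julia; for consistency with the other sketches on this page, the version below re-expresses the same Monte Carlo experiment in Python. The function names, seed, and trial count are illustrative choices.

```python
import random


def play(switch, rng):
    """Simulate one game; return True if the contestant wins the car."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # The host opens a door that hides a goat and was not picked.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Switch to the single remaining closed door.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch, trials=100_000, seed=0):
    """Monte Carlo estimate of the win probability for one strategy."""
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(trials)) / trials


if __name__ == "__main__":
    print(f"stay:   {win_rate(switch=False):.3f}")
    print(f"switch: {win_rate(switch=True):.3f}")
```

With 100,000 trials per strategy, the estimates typically land within about a percentage point of 1/3 and 2/3.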

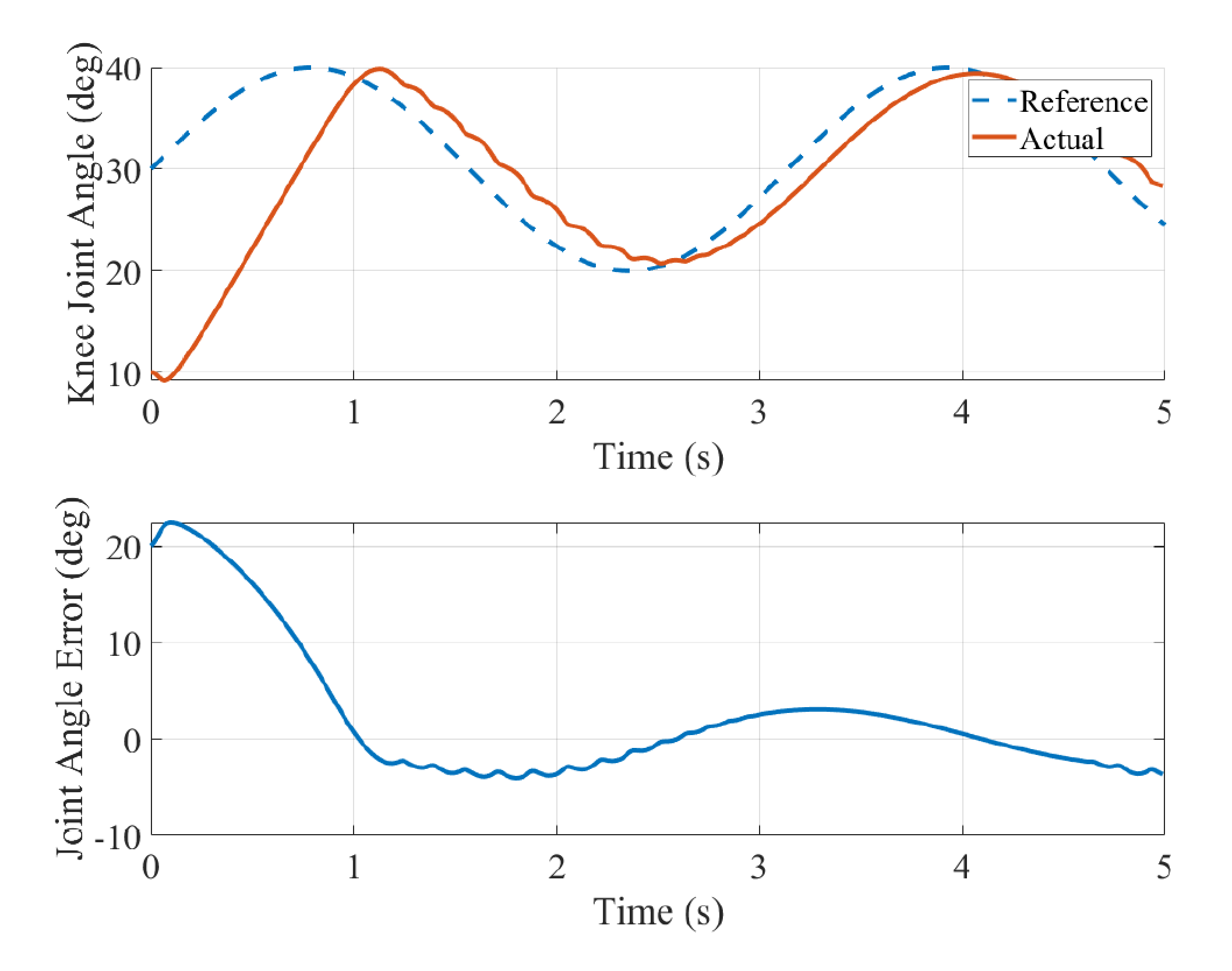

Stability Analysis of a Nonlinear Model Predictive Controller for Functional Electrical Stimulation

We explored control strategies for musculoskeletal models by analyzing the stability and performance of a nonlinear model predictive controller (NMPC) during simulated leg extension tasks. Using Lyapunov stability analysis and targeted PID tuning, we evaluated how different control approaches influence movement accuracy and system robustness within an OpenSim musculoskeletal model. This work demonstrated that appropriately tuned controllers can substantially improve stability and tracking performance, providing insight into how advanced control methods may support rehabilitation or assistive device design.

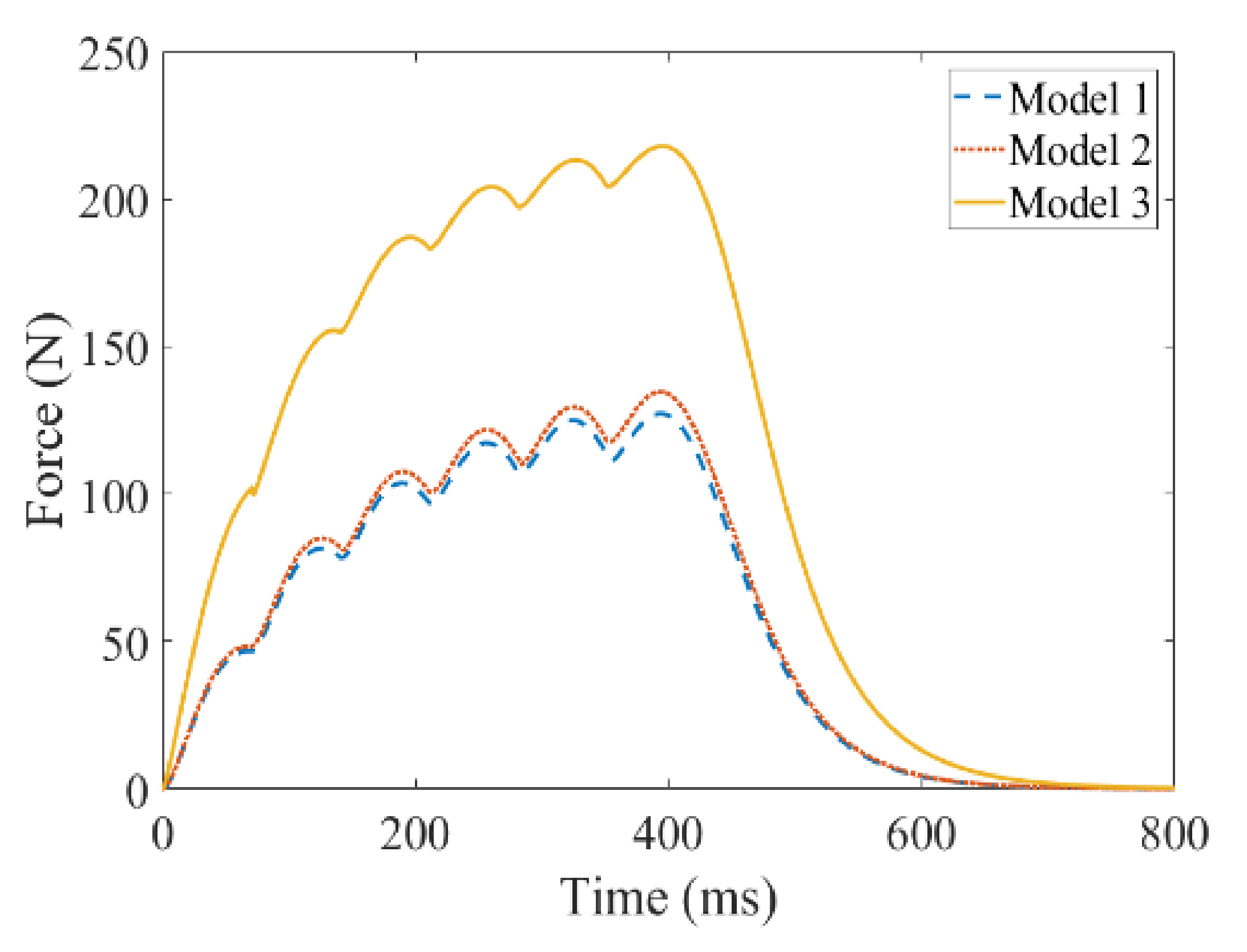

Implementation and Examination of a Mathematical Model for Predicting Muscle Force and Fatigue

I built computational models to simulate isometric muscle force generation and fatigue under different physiological conditions. Using MATLAB’s ode45 solver, I implemented differential equation–based muscle models and ran sensitivity analyses to understand how key parameters influence force output and fatigue behavior. This work helped identify the factors that most strongly shape muscle performance, offering a foundation for applications in biomechanics, rehabilitation, and muscle function prediction.
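
The specific equations used in the project are not given here, so the sketch below uses a deliberately simple, hypothetical two-state model to show the general workflow: a first-order force response whose available capacity is reduced by a fatigue state that accumulates during activation and recovers at rest. A fixed-step Runge-Kutta integrator stands in for MATLAB's adaptive `ode45`, and all parameter values and function names are illustrative.

```python
def rk4(rhs, y0, t0, t1, dt):
    """Fixed-step classical Runge-Kutta integrator (a simple stand-in for ode45)."""
    n = int(round((t1 - t0) / dt))
    y = list(y0)
    ts, ys = [t0], [list(y)]
    for step in range(n):
        t = t0 + step * dt
        k1 = rhs(t, y)
        k2 = rhs(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k1)])
        k3 = rhs(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k2)])
        k4 = rhs(t + dt, [yi + dt * ki for yi, ki in zip(y, k3)])
        y = [yi + dt / 6 * (a + 2 * b + 2 * c + d)
             for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]
        ts.append(t0 + (step + 1) * dt)
        ys.append(y)
    return ts, ys


def muscle_rhs(t, state, activation, p):
    """Hypothetical model: state = [force F in N, fatigue f in 0..1]."""
    force, fatigue = state
    a = activation(t)  # neural activation in [0, 1]
    # Force relaxes with time constant tau toward the capacity left after fatigue.
    d_force = (a * (1.0 - fatigue) * p["f_max"] - force) / p["tau"]
    # Fatigue builds while the muscle is active and recovers while it rests.
    d_fatigue = (p["k_fatigue"] * a * (1.0 - fatigue)
                 - p["k_recovery"] * (1.0 - a) * fatigue)
    return [d_force, d_fatigue]


def simulate(k_fatigue=0.05, duration=30.0, dt=0.01):
    """Sustained maximal isometric contraction (activation held at 1)."""
    params = {"f_max": 100.0, "tau": 0.05,
              "k_fatigue": k_fatigue, "k_recovery": 0.02}

    def rhs(t, y):
        return muscle_rhs(t, y, lambda _t: 1.0, params)

    return rk4(rhs, [0.0, 0.0], 0.0, duration, dt)


if __name__ == "__main__":
    # One-parameter sensitivity sweep over the fatigue rate.
    for k in (0.025, 0.05, 0.1):
        _, states = simulate(k_fatigue=k)
        print(f"k_fatigue={k}: force after 30 s = {states[-1][0]:.1f} N")
```

The sweep in the `__main__` block is a one-parameter version of a sensitivity analysis: in this toy model, a faster fatigue rate lowers the force remaining at the end of the sustained contraction.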

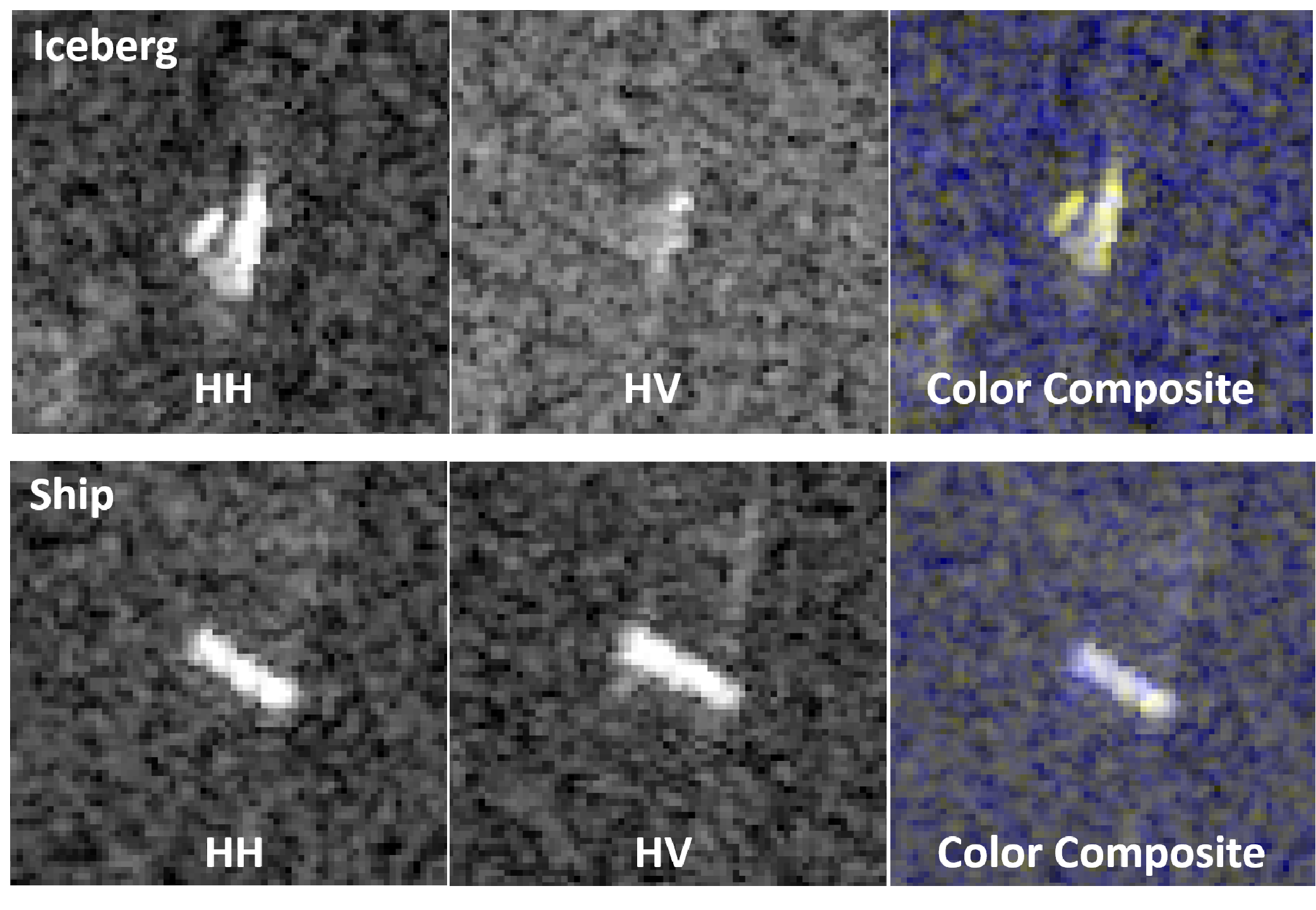

Implementation of Convolutional Neural Networks for Iceberg Classification in Satellite Radar Data

We developed a convolutional neural network (CNN) to classify satellite radar images as containing either ships or icebergs for remote sensing applications. We trained and evaluated the model on a Kaggle dataset using TensorFlow/Keras, ultimately achieving 87 percent accuracy on the test set. This project demonstrated the effectiveness of CNNs for subtle image discrimination tasks and strengthened my experience in deep learning, computer vision, and model evaluation.

Semi-Autonomous Mobile Robot for Jenga Gameplay

We built a custom mobile robot with a 5-axis manipulator to explore autonomous navigation and object-handling capabilities. The system combined 3D-printed hardware, DC motors, LIDAR, IMUs, and Raspberry Pi boards, with a C++ control pipeline that fused LIDAR-based mapping with user-guided manipulation for tasks like picking Jenga blocks. The final prototype demonstrated reliable autonomous navigation and semi-autonomous manipulation, serving as a full end-to-end example of robotic system design, sensing integration, and embedded control.

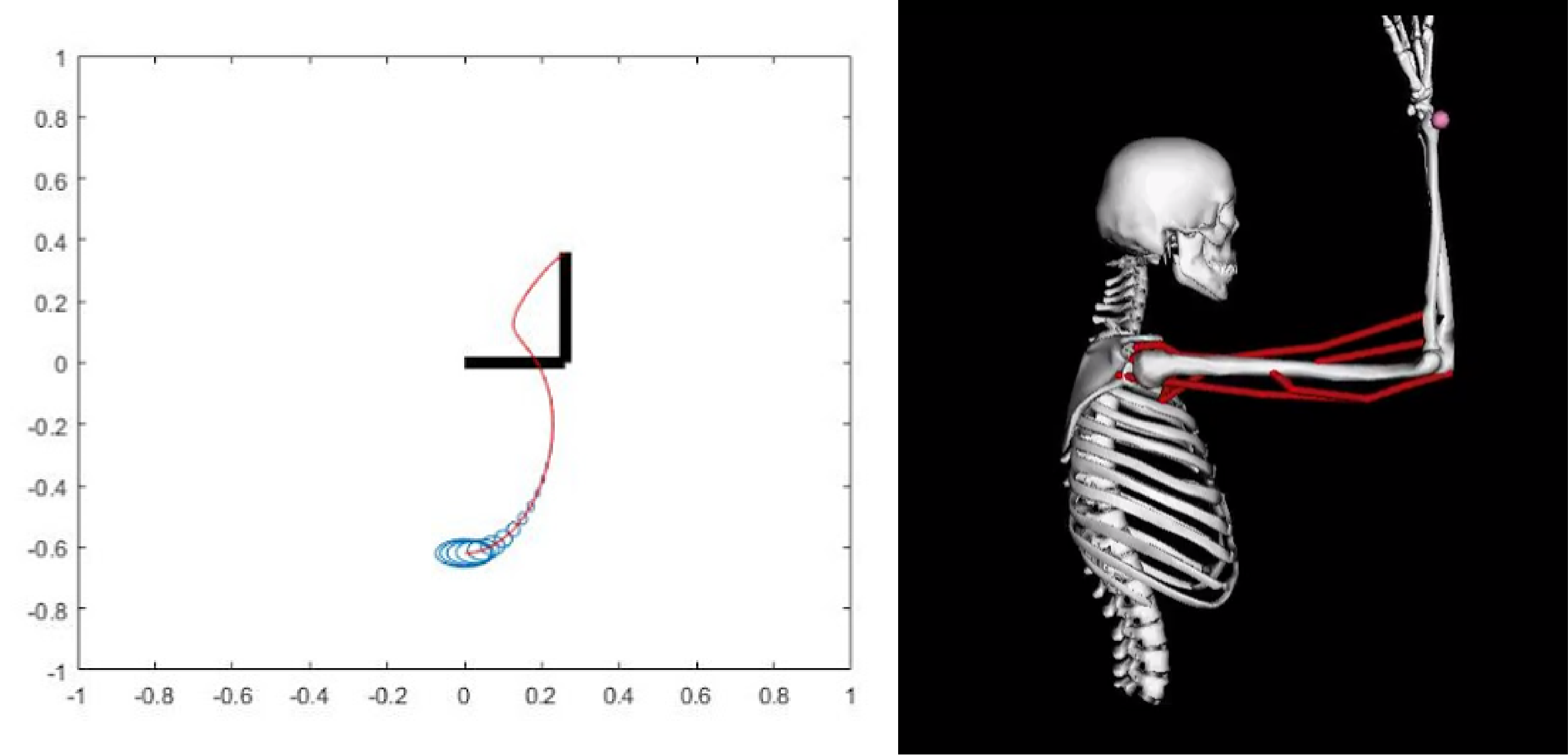

Trajectory Optimization of Human Arm Reaching Model in OpenSim

We explored optimal control strategies for human arm movement using a biomechanical simulation built in OpenSim. We implemented the iterative Linear Quadratic Regulator (iLQR) algorithm to generate efficient reaching trajectories in a sagittal-plane arm model, tuning the controller to balance accuracy and effort. The project demonstrated how iLQR can produce smooth, stable motion plans for musculoskeletal systems and highlighted its potential for analyzing neuromuscular control and informing rehabilitation or robotic-assistive applications. The workflow was developed in MATLAB and OpenSim.

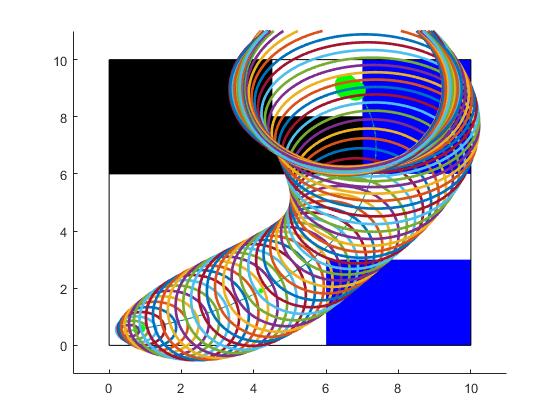

Safe Feedback Motion Planning with Unknown Dynamics for Car Model in MATLAB

We worked on improving motion planning for mobile robots by combining stochastic trajectory optimization with Linear Quadratic Regulator (LQR) feedback control. After implementing the optimization routine, we layered LQR stabilization on top of the planned trajectories to make the robot more robust to disturbances and modeling error. This hybrid approach produced smoother, more reliable paths and demonstrated how feedback control can meaningfully strengthen planning algorithms in uncertain environments. The full workflow was developed and tested in MATLAB.
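
The core idea of layering LQR on a planned trajectory is to apply the planned control plus a feedback correction proportional to the deviation from the plan, u = u_ref - K (x - x_ref). The sketch below shows this on a deliberately reduced scalar system rather than the car model: it computes the steady-state discrete-time LQR gain by iterating the Riccati recursion, then compares open-loop and feedback tracking when the true dynamics differ from the planning model and a constant disturbance acts on the system. The system parameters, the costs `q` and `r`, and the function names are hypothetical.

```python
def dlqr_scalar(a, b, q, r, iters=1000, tol=1e-12):
    """Steady-state LQR gain for x[k+1] = a*x[k] + b*u[k], cost sum(q*x^2 + r*u^2).

    Iterates the discrete-time Riccati recursion until the cost-to-go P settles.
    """
    p = q
    for _ in range(iters):
        p_next = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
        converged = abs(p_next - p) < tol
        p = p_next
        if converged:
            break
    k = a * b * p / (r + b * b * p)
    return k, p


def track(x_ref, u_ref, a_true, b, k, x0, disturbance=0.0):
    """Roll out u = u_ref - k*(x - x_ref) on the true plant.

    a_true may differ from the planning model and a constant disturbance is
    added each step; k = 0 reproduces open-loop execution of the plan.
    """
    x, xs = x0, [x0]
    for xr, ur in zip(x_ref[:-1], u_ref):
        u = ur - k * (x - xr)
        x = a_true * x + b * u + disturbance
        xs.append(x)
    return xs


if __name__ == "__main__":
    a_model, b = 1.0, 0.1
    k, _ = dlqr_scalar(a_model, b, q=1.0, r=0.1)
    # Planned trajectory under the nominal model: constant input of 1.0.
    u_plan = [1.0] * 50
    x_plan = [0.1 * i for i in range(51)]
    open_loop = track(x_plan, u_plan, a_true=1.02, b=b, k=0.0, x0=0.0, disturbance=0.01)
    feedback = track(x_plan, u_plan, a_true=1.02, b=b, k=k, x0=0.0, disturbance=0.01)
    print(f"LQR gain k = {k:.3f}")
    print(f"final error, open loop: {open_loop[-1] - x_plan[-1]:.3f}")
    print(f"final error, with LQR:  {feedback[-1] - x_plan[-1]:.3f}")
```

In this example the open-loop rollout drifts away from the plan as the model error compounds, while the feedback rollout keeps the final tracking error roughly an order of magnitude smaller.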